TL;DR

- Dr. Uri Hasson’s lab has conducted experiments using natural stimuli for over a decade.

- The lab has been using “inter-subject correlation” and “inter-subject functional connectivity” to reveal the synchronization of brain activity between individuals and between brain regions in response to complex scenarios.

- One such study showed that synchronization between students and experts predicted learning outcomes. I was part of the team and helped carry out this longitudinal fMRI project, which was designed by Dr. Meir Meshulam. The study was published in Nature Communications.

- I curated the past data collected by Dr. Hasson’s lab and compiled them into a dataset. With the help of Dr. Samuel Nastase, this dataset was made available to researchers and described in a paper published in Scientific Data.

- As a research specialist in Dr. Hasson’s lab, I trained a shared-response model on the inter-subject functional connectivity matrices derived from the curated dataset. I showed that projecting neural time-course data into the shared space improves the classification accuracy of individual segments of a story.

- My work pointed to the possibility of a universal shared-response model. Dr. Samuel Nastase conducted further analyses and demonstrated the feasibility of this idea. This project was published in NeuroImage.

Inter-subject correlation (ISC): revealing the synchrony of neural activity between people

Have you ever listened to a song that other people also listen to? Or have you ever watched the same movie as someone else? For citizens of a highly interconnected information society like you and me, the answer is trivially yes. Gangnam Style, Call Me Maybe, Bad Romance… these are some of the international super hits I remember. It’s likely that you have heard their melodies too. If you haven’t, that’s fine; there must be other songs in our shared experience. What about Twinkle Twinkle Little Star or Jingle Bells? The point is, we share more or less the same cultural context. At some point in our lives, we have all listened to at least one song that someone else also knows.

The primary auditory cortex in our brain is a region that more or less faithfully represents incoming audio signals. When different people listen to the same song or story, the activity in the primary auditory cortex should be very similar. As a result, if we take the activity time courses (or waveforms) in the primary auditory cortex from two people listening to the same song, they should be highly correlated. We say the song induces high inter-subject correlation in the primary auditory cortex.

This is the idea underlying the “inter-subject correlation (ISC)” analysis.

In so-called higher-order regions of the brain, the neural time courses are not replicas of the perceived events. Instead, they may track the feelings, interpretations, or predictions a person forms about the events they are experiencing. Because feelings, interpretations, and predictions vary across individuals, how strongly the time courses correlate between people may reflect how universal the response to an experience is. If a story is very explicit and obviously happy, we may observe high inter-subject correlation in these higher-order regions. In contrast, if a story is ambiguous, the ISC can be low, depending on whether different people interpret the story in a similar way.

Dr. Uri Hasson of Princeton University is a prominent cognitive neuroscientist who uses ISC and its derivatives to study the neural activity underlying everyday human behavior. In one interesting study by Dr. Hasson’s team, participants listened to the story:

“Pretty Mouth and Green My Eyes” by J. D. Salinger

In the story, a man calls his friend to ask whether the friend knows where the man’s wife is. On the other end of the phone, a woman of unknown identity is lying in the friend’s bed. Before listening to the story, half of the participants were told that it was about a nervous husband and a cheating wife. The other half were told that it was about a paranoid husband and a faithful wife.

Because both groups listened to the same story, the neural response waveforms in the primary auditory cortex were, unsurprisingly, similar across groups. However, the waveforms in higher cognitive regions such as the “precuneus”, the “temporo-parietal junction”, and the “dorsal medial prefrontal cortex” were synchronized within groups but quite different across groups. This study used ISC to reveal the brain system that reflects differences in interpretation and belief among people despite identical perceptual input.
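To make the within-group versus between-group contrast concrete, here is a minimal Python/NumPy sketch on simulated data. It is not the study’s analysis pipeline: the signals, group sizes, and mixing weights are made-up assumptions, and the helper names (`within_group_isc`, `between_group_isc`) are hypothetical. Each simulated “higher-order region” mixes a weak stimulus-driven component shared by everyone with a stronger interpretation-driven component shared only within a group.

```python
import numpy as np

def pearson(a, b):
    """Pearson correlation between two 1-D time courses."""
    return float(np.corrcoef(a, b)[0, 1])

def within_group_isc(group):
    """Leave-one-out ISC inside one group; group is (n_subjects, n_timepoints)."""
    return np.array([pearson(group[i], np.delete(group, i, axis=0).mean(axis=0))
                     for i in range(group.shape[0])])

def between_group_isc(group, other_group):
    """Correlate each member of `group` with the average of `other_group`."""
    other_mean = other_group.mean(axis=0)
    return np.array([pearson(subject, other_mean) for subject in group])

rng = np.random.default_rng(0)
n_subjects, n_timepoints = 10, 300
story = rng.standard_normal(n_timepoints)      # weak stimulus-driven component shared by all
frame_a = rng.standard_normal(n_timepoints)    # hypothetical "cheating wife" interpretation
frame_b = rng.standard_normal(n_timepoints)    # hypothetical "faithful wife" interpretation
group_a = 0.3 * story + frame_a + rng.standard_normal((n_subjects, n_timepoints))
group_b = 0.3 * story + frame_b + rng.standard_normal((n_subjects, n_timepoints))

print(within_group_isc(group_a).mean(), between_group_isc(group_a, group_b).mean())
```

Under these assumptions, within-group ISC comes out far higher than between-group ISC, even though both groups received the same stimulus-driven component.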

High ISC in higher cognitive regions is also an indicator of successful transmission of information. Another study, led by Dr. Meir Meshulam of Dr. Hasson’s lab, showed that in an introductory computer science course, higher ISC between a student and experts (such as the course TAs) was correlated with better learning outcomes. In this longitudinal study, the same group of 24 students completed 6 functional magnetic resonance imaging (fMRI) scans over the course of a semester. I’m proud to have been part of the team that carried out this ambitious project.
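The logic of relating ISC to learning outcomes can be sketched in a few lines. This is a toy simulation under stated assumptions, not the study’s pipeline: a single expert-average time course, a hypothetical per-student `engagement` parameter that controls how closely each student’s response follows the experts, and made-up exam scores that rise with engagement. The sketch computes each student’s ISC with the expert average, then correlates those ISC values with the scores across students.

```python
import numpy as np

def pearson(a, b):
    """Pearson correlation between two 1-D arrays."""
    return float(np.corrcoef(a, b)[0, 1])

rng = np.random.default_rng(5)
n_timepoints, n_students = 400, 24
expert_signal = rng.standard_normal(n_timepoints)   # hypothetical expert (TA) average time course
engagement = rng.uniform(0.0, 1.5, n_students)      # hypothetical alignment strength per student
# Each student's time course follows the experts in proportion to engagement, plus noise
students = engagement[:, None] * expert_signal + rng.standard_normal((n_students, n_timepoints))
scores = 60 + 20 * engagement + rng.normal(0, 3, n_students)   # hypothetical exam scores

# Step 1: ISC between each student and the expert average
student_expert_isc = np.array([pearson(s, expert_signal) for s in students])
# Step 2: correlate ISC with outcomes across students
r = pearson(student_expert_isc, scores)
print(r)
```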

Using “natural stimuli”

An audio-recorded story is a natural stimulus. So is a movie. Natural stimuli are complex things like these that we encounter in daily life. For people who are not doing scientific research, the idea of “natural stimuli” may seem trivial: after all, what would an “unnatural” stimulus even look like?

In fact, psychological studies use “unnatural” stimuli all the time, though they are not called “unnatural”. Instead, they are called “reductionistic” stimuli. Scientists break complex stimuli down to isolate the specific aspect(s) they want to study. Then they create artificial stimuli that exhibit only the desired aspect(s) (e.g., color), with as little variation as possible in other aspects (e.g., shape, size). This philosophy of stimulus design allows scientists to exert “maximal control over as many variables as possible”. As a result, participants in psychological studies usually see simple geometric shapes in solid colors and hear pure-tone beeps.

Scientists have used reductionistic stimuli to reveal many important working principles of the mind and brain. However, it is difficult to extrapolate from brain responses to simplistic stimuli to brain responses in everyday contexts. Watching a short animation of drifting geometric shapes is different from watching a movie. Hearing sequences of beeps and boops is different from listening to a symphony. Social interaction is one of the subjects that are difficult, or even impossible, to study with reductionistic experimental designs.

If we want to study how the brain reacts to intricate social interactions, we can simply let the participants listen to a story or watch a movie rich in such interactions!

Over more than a decade, Dr. Hasson’s lab conducted more than a dozen fMRI experiments with natural stimuli and collected data from hundreds of participants. The stimuli in these experiments were auditory stories with a wide range of content, including “Pretty Mouth and Green My Eyes” mentioned earlier. Some were publicly available shows: Pie Man, by Jim O’Grady; Tunnel Under the World, by Frederik Pohl; Slumlord, by Jack Hitt; and more. Others were stories created for the lab and narrated by professional storytellers. Still others were recordings of laypeople recalling a TV show they had just watched in the lab (such as an episode of the BBC’s Sherlock).

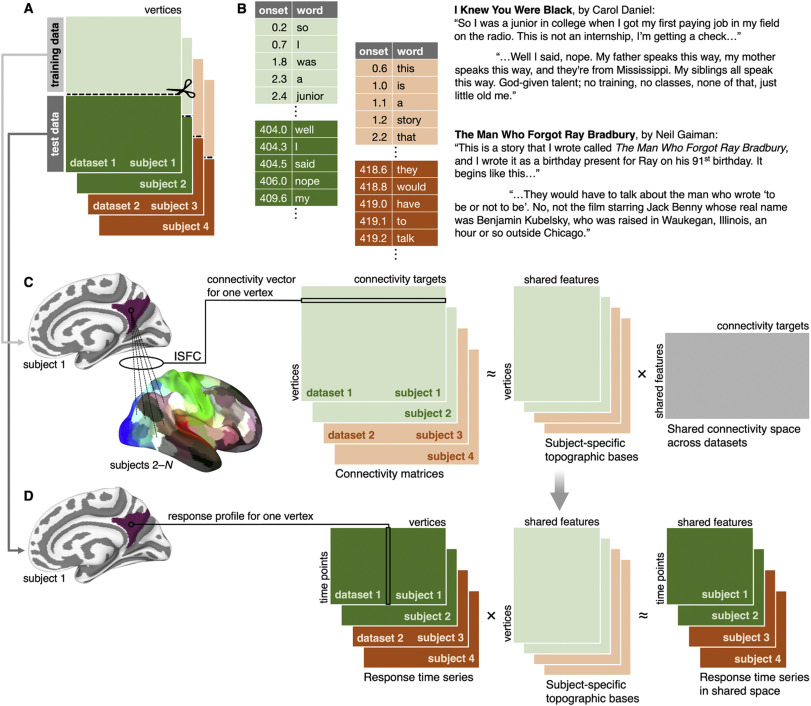

When I worked as a research specialist in Dr. Hasson’s lab, I retrieved the data associated with these experiments from every nook and cranny of the lab server and compiled them. As a curator of the dataset, I re-assessed the quality of the audio stimuli and the data and generated a catalog for them. With the help of Dr. Samuel Nastase, another member of the Hasson Lab, the compiled dataset was made available to interested researchers, along with a publication in Scientific Data describing it.

Inter-subject functional connectivity and shared response model

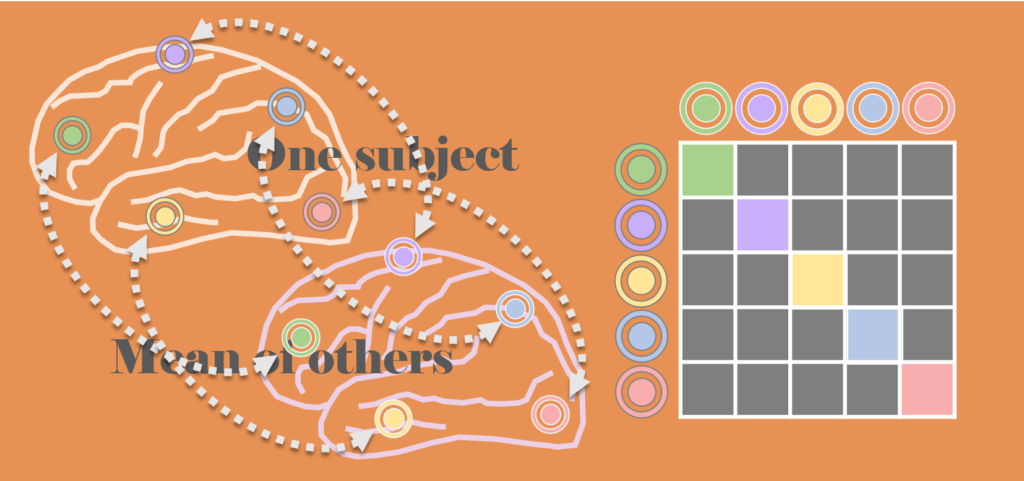

In practice, inter-subject correlation analysis computes the correlation between one participant’s time course and the average time course of all the other participants. We do this computation in each brain region of interest. So, for each participant, we derive one ISC value for each region of their brain.
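The leave-one-out computation described above can be sketched in Python with NumPy. The array layout (participants × time points × regions) and the function name `leave_one_out_isc` are assumptions for illustration. The simulated data contain one region that strongly follows a shared stimulus (like the primary auditory cortex), one that follows it weakly, and one that is pure noise.

```python
import numpy as np

def leave_one_out_isc(data):
    """Leave-one-out inter-subject correlation.

    data: (n_subjects, n_timepoints, n_regions) array.
    Returns (n_subjects, n_regions): for each participant and region, the Pearson
    correlation between that participant's time course and the average time
    course of all the other participants.
    """
    n_subjects = data.shape[0]
    isc = np.empty((n_subjects, data.shape[2]))
    for s in range(n_subjects):
        subject = data[s]                                   # (T, R)
        others = np.delete(data, s, axis=0).mean(axis=0)    # (T, R) average of the rest
        zs = (subject - subject.mean(axis=0)) / subject.std(axis=0)
        zo = (others - others.mean(axis=0)) / others.std(axis=0)
        isc[s] = (zs * zo).mean(axis=0)                     # per-region Pearson r
    return isc

rng = np.random.default_rng(1)
n_subjects, n_timepoints = 8, 400
stimulus = rng.standard_normal(n_timepoints)
weights = np.array([1.0, 0.4, 0.0])   # how strongly each simulated region follows the stimulus
data = stimulus[None, :, None] * weights + rng.standard_normal((n_subjects, n_timepoints, 3))
isc = leave_one_out_isc(data)
print(isc.shape)  # (8, 3)
```

Averaging the output over participants gives one ISC value per region; with these simulated weights, the stimulus-locked region should score highest and the noise region near zero.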

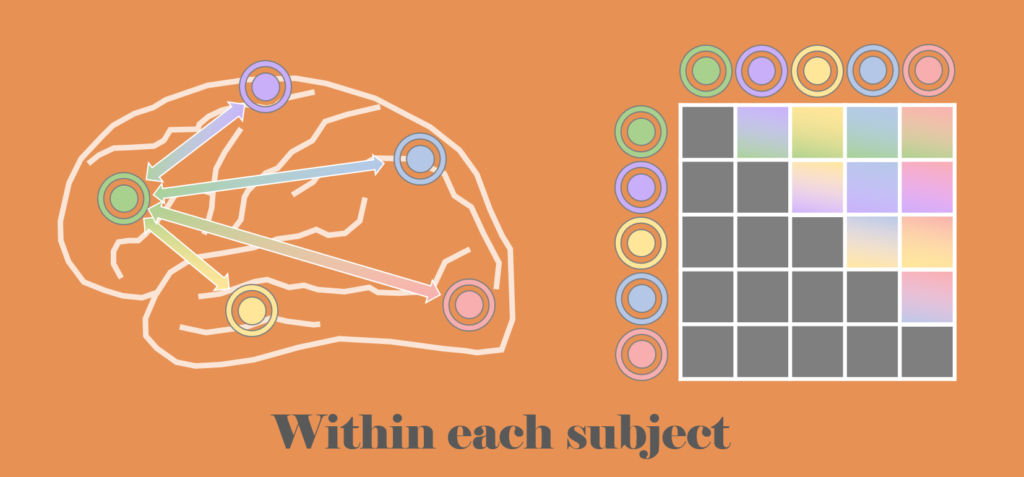

We also often compute, within each participant, the correlation between each brain region and every other brain region. We call this method “functional connectivity (FC)”. FC reveals which brain regions work in synchrony in response to a task. Brain regions that work in synchrony are considered a network.
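Within-participant FC amounts to a region × region correlation matrix. A minimal sketch, assuming one participant’s data is stored as a time points × regions array (the function name `functional_connectivity` and the simulated network are hypothetical):

```python
import numpy as np

def functional_connectivity(timecourses):
    """Within-participant functional connectivity.

    timecourses: (n_timepoints, n_regions) array for one participant.
    Returns an (n_regions, n_regions) matrix of Pearson correlations
    between every pair of regions.
    """
    # np.corrcoef treats rows as variables, so transpose to (regions, time)
    return np.corrcoef(timecourses.T)

rng = np.random.default_rng(2)
n_timepoints = 500
network = rng.standard_normal(n_timepoints)   # activity shared by regions 0 and 1
tc = np.column_stack([
    network + 0.5 * rng.standard_normal(n_timepoints),   # region 0
    network + 0.5 * rng.standard_normal(n_timepoints),   # region 1
    rng.standard_normal(n_timepoints),                   # region 2, independent
])
fc = functional_connectivity(tc)
print(np.round(fc, 2))
```

Regions 0 and 1 come out strongly correlated and would be grouped as a network; region 2 does not.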

When we combine ISC and FC, we get the “inter-subject functional connectivity (ISFC)” method. In ISFC, we compute the correlation between one brain region in one participant and every brain region in the average brain of all the other participants. ISFC improves on FC by filtering out idiosyncratic synchrony intrinsic to each participant, because such fluctuations are not shared with the other participants and therefore do not survive the cross-participant correlation. After computing ISFC, each brain region in a participant has one correlation value per brain region of interest. If there are N brain regions in total, each region has a sequence of N ISFC values, and stacking the N sequences forms an N-by-N ISFC matrix.
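Here is a minimal leave-one-out ISFC sketch in Python with NumPy, assuming the same participants × time points × regions layout (the names `leave_one_out_isfc` and `_zscore_columns` are hypothetical). The simulation adds an idiosyncratic, non-stimulus-locked signal that couples regions 0 and 1 within each participant, while regions 0 and 2 both follow the stimulus. Within-participant FC picks up both couplings; ISFC keeps only the stimulus-driven one. This sketch does not symmetrize the resulting matrices.

```python
import numpy as np

def _zscore_columns(x):
    """Standardize each column (region) over time."""
    return (x - x.mean(axis=0)) / x.std(axis=0)

def leave_one_out_isfc(data):
    """Leave-one-out inter-subject functional connectivity.

    data: (n_subjects, n_timepoints, n_regions).
    Returns (n_subjects, n_regions, n_regions): entry [s, i, j] is the correlation
    between region i of participant s and region j of the others' average.
    """
    n_subjects, n_timepoints, n_regions = data.shape
    out = np.empty((n_subjects, n_regions, n_regions))
    for s in range(n_subjects):
        subject = _zscore_columns(data[s])
        others = _zscore_columns(np.delete(data, s, axis=0).mean(axis=0))
        out[s] = subject.T @ others / n_timepoints
    return out

rng = np.random.default_rng(3)
n_subjects, n_timepoints = 10, 600
stimulus = rng.standard_normal(n_timepoints)
data = rng.standard_normal((n_subjects, n_timepoints, 3))
data[:, :, 0] += stimulus            # regions 0 and 2 both follow the stimulus
data[:, :, 2] += stimulus
intrinsic = rng.standard_normal((n_subjects, n_timepoints))   # idiosyncratic, per participant
data[:, :, 0] += intrinsic           # couples regions 0 and 1 within each participant only
data[:, :, 1] += intrinsic

matrices = leave_one_out_isfc(data)
avg_isfc = matrices.mean(axis=0)
within_fc = np.corrcoef(data[0].T)   # ordinary FC for participant 0, for comparison
print(np.round(within_fc[0, 1], 2), np.round(avg_isfc[0, 1], 2))
```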

It is not far-fetched to assume that each event in a story gives rise to a specific connectivity pattern, that is, a specific ISFC matrix. Could we learn the association between events and their ISFC matrices, then identify a previously unseen event from its ISFC matrix?

This is where the shared-response model (SRM) kicks in

The shared-response model (SRM) takes as input the ISFC matrices from a group of participants. It returns two types of outputs.

- A “shared connectivity space” represented as a matrix. The dimensions of this matrix are “the number of shared features” × “the number of brain regions of interest”.

- Participant-specific projection matrices. Each projection matrix maps the shared connectivity space onto (an approximation of) the corresponding participant’s ISFC matrix. The dimensions of these matrices are “the number of brain regions of interest” × “the number of shared features”.
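For intuition about how these shapes fit together, here is a minimal sketch of a deterministic SRM fitted by alternating updates, in Python with NumPy. It is a simplified assumption, not necessarily the exact model used in this project (the SRM literature also includes a probabilistic formulation). Each participant’s matrix `X_i` (here N × N) is approximated as `W_i @ S`, where `W_i` (N × k) has orthonormal columns and `S` (k × N) is the shared connectivity space. The shared space is updated as the average of `W_i.T @ X_i`, and each `W_i` as the orthogonal Procrustes solution `U @ Vt` from the SVD of `X_i @ S.T`. The toy inputs are random low-rank-plus-noise matrices, not real ISFC matrices, and `fit_srm` is a hypothetical name.

```python
import numpy as np

def fit_srm(matrices, n_features, n_iter=20, seed=0):
    """Deterministic shared response model (sketch).

    matrices: list of (n_regions, n_columns) arrays, one per participant.
    Returns (projections, shared) with X_i ≈ projections[i] @ shared, where each
    projection is (n_regions, n_features) with orthonormal columns and shared
    is (n_features, n_columns).
    """
    rng = np.random.default_rng(seed)
    n_regions = matrices[0].shape[0]
    # Random orthonormal initialization of each participant's projection
    projections = [np.linalg.qr(rng.standard_normal((n_regions, n_features)))[0]
                   for _ in matrices]
    for _ in range(n_iter):
        # Shared space: average of every participant's data mapped into feature space
        shared = np.mean([w.T @ x for w, x in zip(projections, matrices)], axis=0)
        # Projections: orthogonal Procrustes solution given the current shared space
        projections = []
        for x in matrices:
            u, _, vt = np.linalg.svd(x @ shared.T, full_matrices=False)
            projections.append(u @ vt)
    shared = np.mean([w.T @ x for w, x in zip(projections, matrices)], axis=0)
    return projections, shared

# Toy data: N = 30 "regions", k = 5 shared features, 6 participants
rng = np.random.default_rng(4)
n_regions, k, n_subjects = 30, 5, 6
true_shared = rng.standard_normal((k, n_regions))
true_w = [np.linalg.qr(rng.standard_normal((n_regions, k)))[0] for _ in range(n_subjects)]
matrices = [w @ true_shared + 0.05 * rng.standard_normal((n_regions, n_regions))
            for w in true_w]

projections, shared = fit_srm(matrices, n_features=k)
residual = sum(np.linalg.norm(x - w @ shared) ** 2 for w, x in zip(projections, matrices))
relative_error = residual / sum(np.linalg.norm(x) ** 2 for x in matrices)
print(shared.shape, projections[0].shape, relative_error)
```

The orthonormality constraint is what makes each update a closed-form step; without it, `W_i` and `S` could trade scale freely.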

A “shared feature” is an aspect of the input data that the SRM algorithm finds essential for explaining the input ISFC matrices. The number of features is chosen before running the SRM. However, the “meaning” of these features is largely obscure to us. Some features may reflect the social aspects of the story, and some may reflect the physical properties of the audio stimuli, but the algorithm doesn’t provide such information. We have to take extra steps if we want to find out what the features mean. Mathematically speaking, each feature is a linear combination of the brain regions of interest. The weights of that combination are determined by the algorithm, which is agnostic to the underlying meaning of the data. For the mathematical details of the SRM algorithm, interested readers may refer to Chen et al. (2015), listed below.

The bottom line is that the SRM algorithm finds the connectivity pattern shared across people (the shared connectivity space) and the way in which each person’s connectivity pattern differs from the shared pattern (the projection matrices).

Application of the ISFC-based SRM

Another way to understand the projection matrices is to regard them as a table of each brain region’s weight on each feature.

Suppose feature 1 is “social”, feature 2 is “physical”, and feature 3 is “emotional”. By inspecting an individual’s projection matrix, we may find that brain region 1 weighs the social aspect of the story heavily but not the physical aspect, while brain region 2 weighs the emotional aspect most, followed by the physical aspect, and not the social aspect at all. We may also find that regions 1 and 2 of another person weigh the same three features differently.

We can use these projection matrices to convert the time courses of all the brain regions in a participant into time courses of the features. After this conversion, we can observe the ebb and flow of each feature as the story progresses. For example, if the story features a monologue at some point, we may see the time course of the “social” feature swing to its lowest point.
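Assuming the projection matrix has orthonormal columns (an assumption that holds for a deterministic SRM), converting region time courses into feature time courses is a single matrix product: each feature’s time course is a weighted sum of the region time courses, with the weights taken from one column of the projection matrix. The function name `to_feature_timecourses` and the random matrices below are hypothetical.

```python
import numpy as np

def to_feature_timecourses(projection, region_timecourses):
    """Project region time courses into the shared feature space.

    projection: (n_regions, n_features) participant-specific matrix, assumed
        to have orthonormal columns.
    region_timecourses: (n_regions, n_timepoints) array for the same participant.
    Returns an (n_features, n_timepoints) array of feature time courses.
    """
    return projection.T @ region_timecourses

rng = np.random.default_rng(7)
n_regions, n_features, n_timepoints = 20, 3, 100
# Hypothetical orthonormal projection matrix for one participant
projection, _ = np.linalg.qr(rng.standard_normal((n_regions, n_features)))
true_features = rng.standard_normal((n_features, n_timepoints))
# Noise-free toy region data generated from the features
region_timecourses = projection @ true_features
features = to_feature_timecourses(projection, region_timecourses)
print(features.shape)  # (3, 100)
```

In this noise-free toy case the original feature time courses are recovered exactly; with real data, the projection keeps the part of the signal that lies in the shared space.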

We can also use the time courses of the features to identify specific segments of the story.

This was the main project I worked on when I was in Dr. Hasson’s lab.

I took the fMRI data from some of the longest stories and split each time course in half. I used the ISFC matrices derived from the first half to train an SRM and obtain the individual projection matrices. Then, I chunked the second half into 25 segments and converted these segments of brain-region time courses into feature time courses. Lastly, I used a rank-based classification method and found that a specific segment could be identified out of the 25 with 66.6% accuracy.

This performance was far better than chance (1 in 25, or 4% accuracy). It was also better than the same classification performed on brain-region time courses (as opposed to feature time courses), which yielded an accuracy of 54.4%.
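The identification step can be sketched as follows, in Python with NumPy. The post does not spell out the exact rank-based procedure, so this sketch swaps in a simple correlation-based rule as an assumption: for each held-out participant, every segment is compared with all 25 segments of the other participants’ average, and it counts as identified when the correct segment ranks first. The function name and simulated data are hypothetical; chance level is 1/25 = 4%.

```python
import numpy as np

def segment_identification_accuracy(feature_data, n_segments=25):
    """Leave-one-participant-out time-segment identification (sketch).

    feature_data: (n_subjects, n_features, n_timepoints) held-out feature time courses.
    A segment counts as identified when its true counterpart in the others'
    average ranks first (highest correlation) among the n_segments candidates.
    """
    n_subjects, _, n_timepoints = feature_data.shape
    seg_len = n_timepoints // n_segments          # trailing time points are dropped
    hits = []
    for s in range(n_subjects):
        test = feature_data[s]
        others = np.delete(feature_data, s, axis=0).mean(axis=0)
        # Flatten each (features x seg_len) segment into one row vector
        test_segs = np.stack([test[:, i * seg_len:(i + 1) * seg_len].ravel()
                              for i in range(n_segments)])
        other_segs = np.stack([others[:, i * seg_len:(i + 1) * seg_len].ravel()
                               for i in range(n_segments)])
        # Rows 0..n-1 are test segments, rows n..2n-1 are candidates; keep the cross block
        corr = np.corrcoef(test_segs, other_segs)[:n_segments, n_segments:]
        hits.append(np.argmax(corr, axis=1) == np.arange(n_segments))
    return float(np.mean(hits))

chance = 1 / 25   # 0.04, the 4% quoted above

rng = np.random.default_rng(8)
n_subjects, n_features, n_timepoints = 10, 5, 500
shared = rng.standard_normal((n_features, n_timepoints))   # stimulus-locked signal
with_signal = shared + rng.standard_normal((n_subjects, n_features, n_timepoints))
noise_only = rng.standard_normal((n_subjects, n_features, n_timepoints))
print(segment_identification_accuracy(with_signal))   # expected to be well above chance
print(segment_identification_accuracy(noise_only))    # expected to hover near chance
```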

Training an SRM on ISFC matrices rather than on time-course matrices has another benefit: we can train one SRM across experiments that used different stories. Because the stories differ in length, the “brain region” × “time point” matrices differ in size across experiments, and the SRM algorithm can’t take matrices of different sizes as input. In contrast, if we first compute ISFC matrices from the data of each experiment, all the matrices share the same “brain region” × “brain region” shape, so the SRM algorithm can work on them.
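A quick shape check illustrates this point. In the sketch below (Python with NumPy, random data, hypothetical function name `average_isfc`), two simulated experiments have different numbers of time points and participants, so their time-course matrices cannot be fed to an SRM together, while their average ISFC matrices share a single regions × regions shape.

```python
import numpy as np

def average_isfc(data):
    """(n_subjects, n_timepoints, n_regions) -> (n_regions, n_regions) mean leave-one-out ISFC."""
    def zscore(x):
        return (x - x.mean(axis=0)) / x.std(axis=0)

    n_subjects, n_timepoints, _ = data.shape
    matrices = [zscore(data[s]).T @ zscore(np.delete(data, s, axis=0).mean(axis=0)) / n_timepoints
                for s in range(n_subjects)]
    return np.mean(matrices, axis=0)

rng = np.random.default_rng(6)
n_regions = 4
story_a = rng.standard_normal((5, 300, n_regions))   # hypothetical story with 300 time points
story_b = rng.standard_normal((7, 550, n_regions))   # hypothetical story with 550 time points
print(story_a.shape[1:], story_b.shape[1:])                     # (300, 4) (550, 4)
print(average_isfc(story_a).shape, average_isfc(story_b).shape)  # (4, 4) (4, 4)
```

Only the number of regions has to match across experiments, which is why a common brain parcellation makes this kind of aggregation possible.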

My work at Princeton pointed to the possibility of constructing such a universal shared response model, which integrates the data from all the past experiments. Dr. Samuel Nastase carried this idea a step further and showed that it is feasible and yields meaningful results. Building on my work, he conducted follow-up analyses and wrote a fantastic journal article describing this grand project. I greatly appreciate his effort, which introduced my work in Dr. Hasson’s lab to the scientific community.

Related scientific publications

- Nastase, S. A., Liu, Y.-F., Hillman, H., Norman, K. A., & Hasson, U. (2020). Leveraging shared connectivity to aggregate heterogeneous datasets into a common response space. NeuroImage.

- Nastase, S. A., Liu, Y.-F., Hillman, H., Zadbood, A., Hasenfratz, L., Keshavarzian, N., . . . Hasson, U. (2021). The “Narratives” fMRI dataset for evaluating models of naturalistic language comprehension. Scientific Data.

- Meshulam, M., Hasenfratz, L., Hillman, H., Liu, Y.-F., Nguyen, M., Norman, K. A., & Hasson, U. (2021). Neural alignment predicts learning outcomes in students taking an introduction to computer science course. Nature Communications.

- Chen, P.-H., Chen, J., Yeshurun, Y., Hasson, U., Haxby, J., & Ramadge, P. J. (2015). A Reduced-Dimension fMRI Shared Response Model. Advances in Neural Information Processing Systems 28.

- Yeshurun, Y., Swanson, S., Simony, E., Chen, J., Lazaridi, C., Honey, C. J., & Hasson, U. (2017). Same Story, Different Story. Psychological Science.

- Simony, E., Honey, C. J., Chen, J., Lositsky, O., Yeshurun, Y., Wiesel, A., & Hasson, U. (2016). Dynamic reconfiguration of the default mode network during narrative comprehension. Nature Communications.

- Chen, J., Leong, Y. C., Honey, C. J., Yong, C. H., Norman, K. A., & Hasson, U. (2017). Shared memories reveal shared structure in neural activity across individuals. Nature Neuroscience.